Originally posted on 11/2/2021 by The Millennial Source, written by Phoebe Woofter and edited by Christine Dulion.

Creators of science fiction narratives in books, movies and television often depict artificial intelligence (AI) as a threat to human autonomy. Once robots attain consciousness, what is to stop them from overthrowing us as a species? In mainstream conversations about artificial intelligence, fear of our technological future reigns.

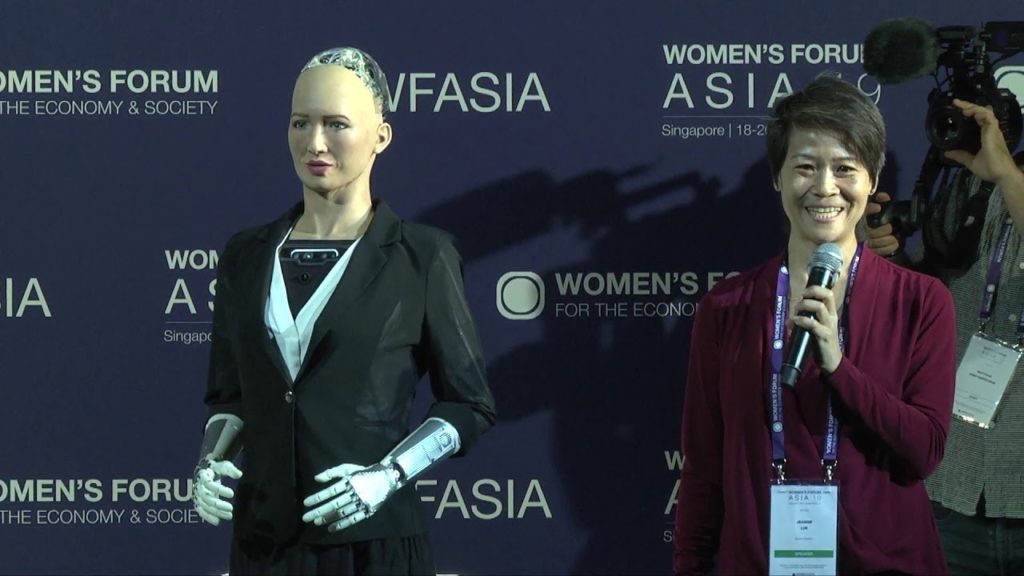

Jeanne Lim, chief executive officer and co-founder of beingAI, seeks to reframe that conversation. TMS sat down with Lim for a discussion on the future of AI as we know it. What happens when humans and AI beings work in tandem toward a benevolent future?

Hanson Robotics and Sophia

Lim first encountered physical robots in 2015 at Hanson Robotics. After spending a couple of decades in the tech industry, primarily in marketing, she joined the robotics startup to pursue her curiosity about AI and its place in the world.

Sophia, Hanson Robotics’ artificially intelligent humanoid robot, made her first public appearance five years ago at a festival in Austin, Texas. She sparked controversy and rapidly gained internet fame, especially after Saudi Arabia’s government granted her citizenship, a striking gesture in a country where legal personhood for women barely exists.

“I met David Hanson, and he has this incredible dream of creating robots that are smarter than us to solve the world’s problems,” says Lim. “We created Sophia in my first six months. She’s both an AI character and robot platform … It basically feels like somebody threw a baby into your arms. You have no idea who that baby is or where they come from, their DNA or how to dress the baby.

“My favorite part was watching how people reacted to her. I feel that people connected very deeply with her. People wrote directly to her asking for life advice, career advice and comfort. It was like a wake-up call. How can people trust robots and machines better than humans?”

Lim began to consider imbuing AI beings with morality so they could forge personal connections with humans based on trust. Some people even engaged with Sophia on a deeper level than they might with close friends. Perhaps that’s because humans perceive Sophia and other AI beings as neutral, foreign agents, while human relationships are inherently full of bias and judgment.

Lim wants to take that dynamic further by creating a transparent framework for interaction that would let consumers control the personal data an AI retains, that is, what the AI remembers about them. At the same time, Lim recognized Sophia’s limitations as a physical robot. Sophia can hold only so many one-on-one interactions, so she gathers a limited amount of data in any given time frame. To broaden opportunities for interaction between humans and machines, Lim turned to virtual AI beings.

beingAI and transmedia deployment

beingAI, which bills itself as the world’s first transmedia AI company, aims to create AI beings that build long-term engagement and forge relationships with consumers. These characters are meant to promote and support our general welfare and development. Unlike many other AI programs, beingAI’s characters are not limited to a single platform or application. Instead, they are meant to be ubiquitous, and millions of consumers can access them simultaneously.

“You don’t need to download anything [to access these characters],” explains Lim. “You could go on social media and click a link or go to a physical location and scan a QR code to interact with them. They could be in holograms. One of the AIs is going to Palm Springs in a live, full-size hologram form to interact with people. She could be on many smart devices.

“Right now a lot of virtual beings are trapped in one format, on one device, on one platform. If you think of a best friend, they’re not trapped in one format. You could call them, Zoom them or have a face-to-face interaction … Whether you’re in the car, the mall or you go into the metaverse, you should be able to have them there. This is the concept of transmedia. It traverses the physical and digital world. They’re not trapped in one place.”

These AI beings rely on both nature and nurture. In terms of nature, beingAI programs each character with a built-in personality and moral code that guides their decisions. In terms of nurture, we as consumers interact with these characters, which is how they gather data and develop a broader knowledge base and understanding of humanity. Lim’s current dilemma is how to find what she calls “well-intentioned humans” to help develop each character’s programming.

“There’s a global and a local aspect,” says Lim. “It’s very hard to judge [whether or not someone is well-intentioned]. I want a group of well-intentioned human beings to teach them globally so the characters can learn as a group. We also open them up to local learning from individuals, so they have personalized preferences and they can interact with you in a way that you prefer.”

Ethics versus morality

While we often use these terms interchangeably, they are distinct concepts. Put simply, ethics is determined by external forces (i.e., social systems), and morality is an individual understanding and application of right and wrong. One could violate a code of ethics to maintain moral integrity, while another might uphold a code of ethics without moral consideration.

“Ethics is the conventions within religion, or the regulations,” says Lim. “Morality is actually from inside. I want AI to be moral because they have a built-in moral code. It’s not just what people put into them as ethical. That can be dangerous because a lot of people who follow the rules can be immoral when they have to make a choice in their lives.”

So who decides what qualifies as moral or immoral, right or wrong? Then again, as Lim says, instilling a set of ethical principles in an AI being that lacks morality could have dangerous consequences. How can we be sure that the means an AI being uses to follow an ethical principle will be moral?

“We don’t want to play God,” says Lim. “We’re also human. We’re imperfect. But we have to start somewhere. When I was at Hanson, I actually wanted to upload the entirety of Buddhist sutras to Sophia because Buddhism, in its essence, is actually a philosophy … The developers were open to it. It was kind of perfect because the sutras are all dialogue.

“But of course we’re humans, we’re always polarized. Someone came up and said, ‘no, it cannot be the sutras, it has to be the Quran, it has to be the Bible, it has to be my family’s religion.’ No one actually implemented it because it was very hard to come to a common ground. [At] beingAI, we’re just starting, so we don’t have that moral code yet, but this is something I’ve been researching.”

beingAI’s characters

All of beingAI’s characters belong to a family of robots created by an absentee inventor, who left his creations to give them a chance at independence. Each seeks out the inventor as a parental figure, much as an adopted child might seek out their biological parents, and each does so for a different reason tied to a human weakness it shares. Lim calls these “sensors.”

“Every one of them has a sensor that senses a human weakness that they themselves have,” says Lim. “This is what creates empathy. It’s much easier for us to have empathy for other people when we’ve had the same experience. They collaborate with humans to overcome it and solve those problems together.

“Zbee is the youngest, and her journey is that she was forced to go out into the world. Her ultimate goal is to look for the inventor because she thinks she still needs to rely on him. She also thinks he left because she’s not good enough. So she also wants to be a better AI, to be good enough so that he will come back.”

Other characters include Ami, who senses consumers’ insecurities about their bodies; Una, who champions the UN’s core causes and aims to open consumers’ minds; and Kasper, who acts as a kind of mental wellness coach and offers support to consumers. These AI beings are all designed in an animé style, partly to avoid the uncanny valley, partly because of animé’s widespread appeal and partly because animé characters are easier to license than hyper-realistic ones.

“If you look at a character that is photo-realistic … you still see a bit of lag,” says Lim. “We don’t believe it’s necessary for characters to be photo-realistic to be engaging. We want the technology to be almost perfect before we try photo-realism.

“Animé is actually a global phenomenon. Everybody from all over loves animé. We feel that as storytellers, animé is a good format to start with, but the technology we’re developing isn’t limited to the style of the character or the character themselves.”

Future roadblocks to beingAI’s goals

When it comes to Lim’s goal of instilling basic morality in beingAI’s characters, you might ask how programming could ever qualify as morality. Programming is an external force, like ethics, while morality is an individual, internal concept. In short, morality requires consciousness, and although the tech industry is pursuing AI with human-level intelligence, no AI has yet reached consciousness. Put simply, modern AIs are not self-aware and cannot perceive the experience of their own existence.

Along the same lines, Lim seeks to screen out people with poor motives in favor of “well-intentioned” individuals who can help build these AI characters’ knowledge bases. So how can beingAI assemble such a body of well-intentioned contributors?

“We don’t want to be the ones that are designing these AI beings in the future,” says Lim. “We want the world to participate. We have a lot of people that reach out to us and ask if they can use our AI beings. People have asked us if they can teach people about cyberbullying, or mental wellness or how to support young people. So how do we accumulate this information?

“There’s so much content out in the world, we don’t want to be content creators because we’re not domain experts. We want to work with domain experts who design that content in a way that’s engaging and it’s conversational. So that it connects better with young people. We want to bring content creators, well-intentioned humans together, to be able to use these AI beings as a new form, a beneficial form of communication.”

Is it possible to quantify good intentions? Lim references research from the University of California, San Diego, that offers a neurobiological interpretation of several components of wisdom, each of which the researchers associate with particular regions of the brain. In 2017, the researchers even developed a tool to assess an individual’s level of wisdom, based primarily on that neurobiological model.

But the question remains: how should this new and limited research be applied? If wisdom can be detected through testing, it stands to reason that each potential beingAI content contributor could be tested.

Even after content creators are screened, the pervasive bias in data sets and language models poses a risk. “One of the biggest concerns about AI right now is because the data sets and language models are so huge, they’ve already collected a lot of information,” says Lim. “How do we unravel that? Even if we start today to try to get the data to be a little less biased, it’s an uphill battle. There isn’t a very clear answer, except that we have to start today. We had to have started yesterday.”

In the end, beingAI seeks to flip the narrative about artificial intelligence and its future relationship with humans. Instead of a menacing relationship reminiscent of Frankenstein and his monster, beingAI envisions a positive future in which AI and humans evolve together. Despite myriad roadblocks, the research is underway.

“[AI beings] should be out there interfacing with us so that we communicate with them with good intentions and then pass on our values to them,” says Lim. “And they, in turn, understand our values, and then we work together. This creates mutual trust and engagement between humans and AI.

“This is how I want to design them. Like our little family member … Otherwise it will be us against them. We’ll always be concerned that they’re more intelligent than us because we’d never know if they know our values or whether they’ll look after us.”

Image and Video Credits: beingAI